-

Biking along creeks

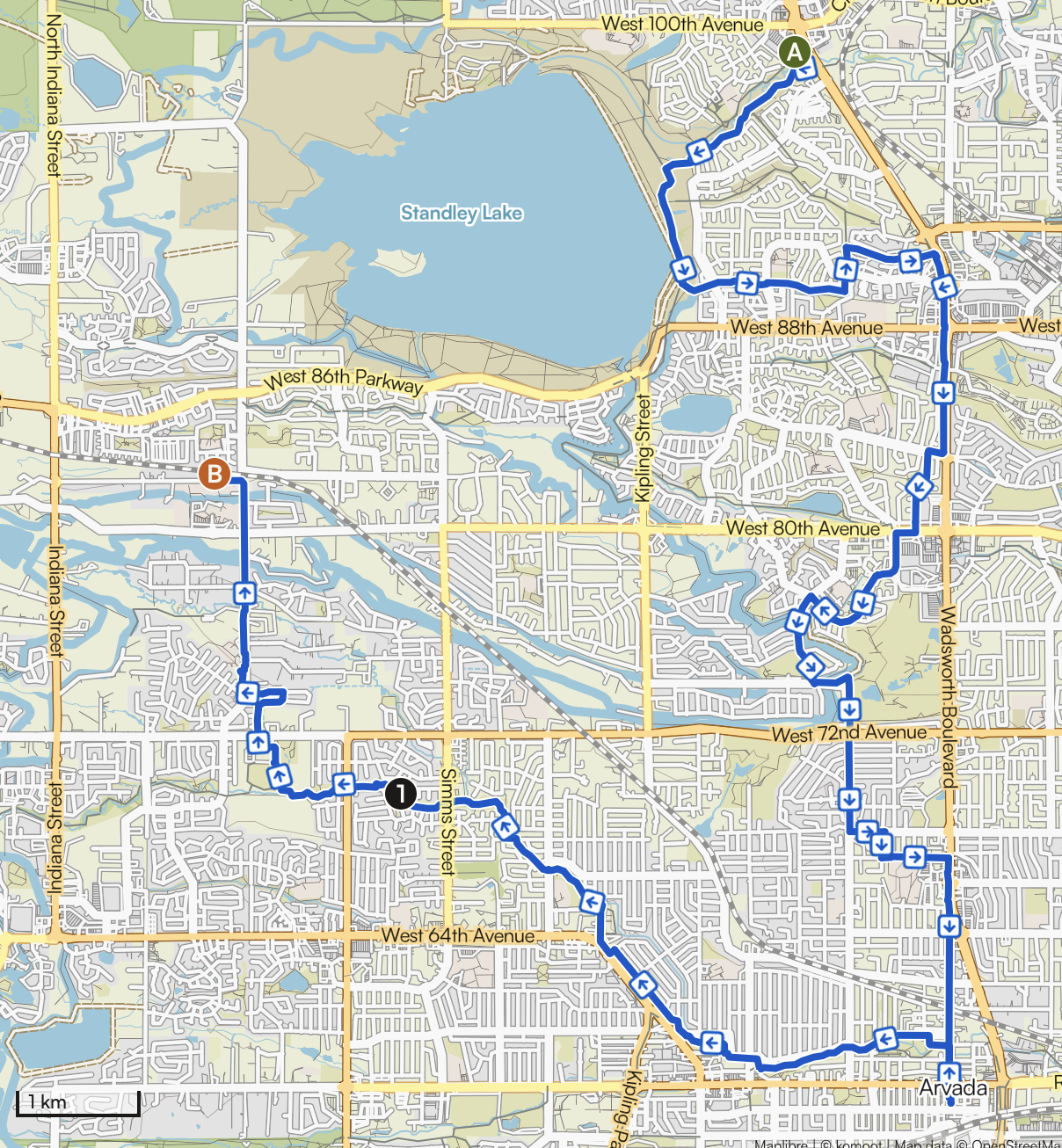

Over the past month I have taken this route three times. It takes 2 to 2.5 hours, depending on how hard I pedal. The first two times I headed out going east and returned going west. The last time I did it in reverse (by accident, really; I just started going that way out of habit for some reason).…

-

Big Dry Creek Trail

The weather was quite nice again today, with a high around 7°C (45°F) and sunshine, so I decided to take another walk. I had some packages to return to Amazon, so Clare dropped me off at the UPS store around 11 a.m., and I walked home. I started off on the Big Dry Creek Trail towards Standley…

-

Standley Lake

I have continued to spend a good portion of my weekends walking and exploring. Three weeks ago I decided to try to walk all the way around nearby Standley Lake. I discovered it is not possible to do so on trails, because a large portion of the area is a reserve set aside as a bald eagle breeding ground.…

-

Little Dry Creek Trail

Two weeks ago Saturday I decided I wanted to take a long walk. It was only about 5°C, but sunny, so I figured it would be fine. I bundled up in my coat and thermal jeans. I wasn't 100% certain where I would go. I am trying to get to know my…

-

Power walking

No, it is not what you are picturing. I did not walk extra briskly in a jogging outfit today. Rather, I walked 1.2 miles to the supermarket and back. The way back is mostly uphill, and I had my backpack full of 10 cans of chickpeas (because they were on sale for 10/$10) and a…